
Another installment of me figuring out more of Kubernetes.


Full code is available at meain/s3-mounter.

So, I was working on a project that lets people log in to a web service and spin up a coding environment with prepopulated data and credentials. We were spinning up a Kubernetes pod for each user.


All of our data is in S3 buckets, so it would have been really easy if we could just mount the buckets in the Docker container. My initial thought was that there would be some PersistentVolume (PV) I could use, but it can't be that simple, right?


So after some hunting, I decided to just mount the S3 bucket as a volume in the pod. I was not sure if this was the right way to go, but I went with it anyway.

I found the repo s3fs-fuse/s3fs-fuse, which lets you mount an S3 bucket as a FUSE filesystem. I tried it out locally and it seemed to work pretty well. I could not get it to work in a Docker container at first, but figured out that I just had to give the container extra privileges.


Adding --privileged to the docker command takes care of that.

Now to actually get it running on k8s.

Step 1: Create Docker image

This was relatively straightforward: all I needed to do was start from an Alpine image and install s3fs-fuse/s3fs-fuse onto it.

Just build the image and push it to your container registry. I have published this image on my Docker Hub, so you can use that if you want.

Step 2: Create ConfigMap

The Dockerfile does not contain anything deployment-specific, such as the bucket name or keys. Instead, we use a ConfigMap to inject those values into the container.

Replace the empty values with your own data. After that, just run k apply -f configmap.yaml (k here is short for kubectl).

configmap.yaml
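The original manifest is not reproduced here, so below is a minimal sketch of what such a ConfigMap could look like. The name s3-config and the keys S3_BUCKET, AWS_KEY and AWS_SECRET_KEY are hypothetical; they only work if the image's entrypoint reads environment variables with exactly those names and passes them to s3fs.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: s3-config          # hypothetical name, referenced by the DaemonSet via envFrom
data:
  # Hypothetical keys: they must match the environment variables
  # that your image's entrypoint hands to s3fs.
  S3_BUCKET: ""            # name of the bucket to mount
  AWS_KEY: ""              # access key ID
  AWS_SECRET_KEY: ""       # secret access key
```

Since these are credentials, a Kubernetes Secret (consumed the same way with envFrom and secretRef) is the more usual home for the two key values.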

Step 3: Create DaemonSet

Technically, we could just do this mounting in every container, but there is a better way. We mount S3 inside one container, but the folder we mount it to is mapped to a folder on the host machine.

With this, any other container can get at that folder from the host machine just as if it were mounting a normal filesystem.

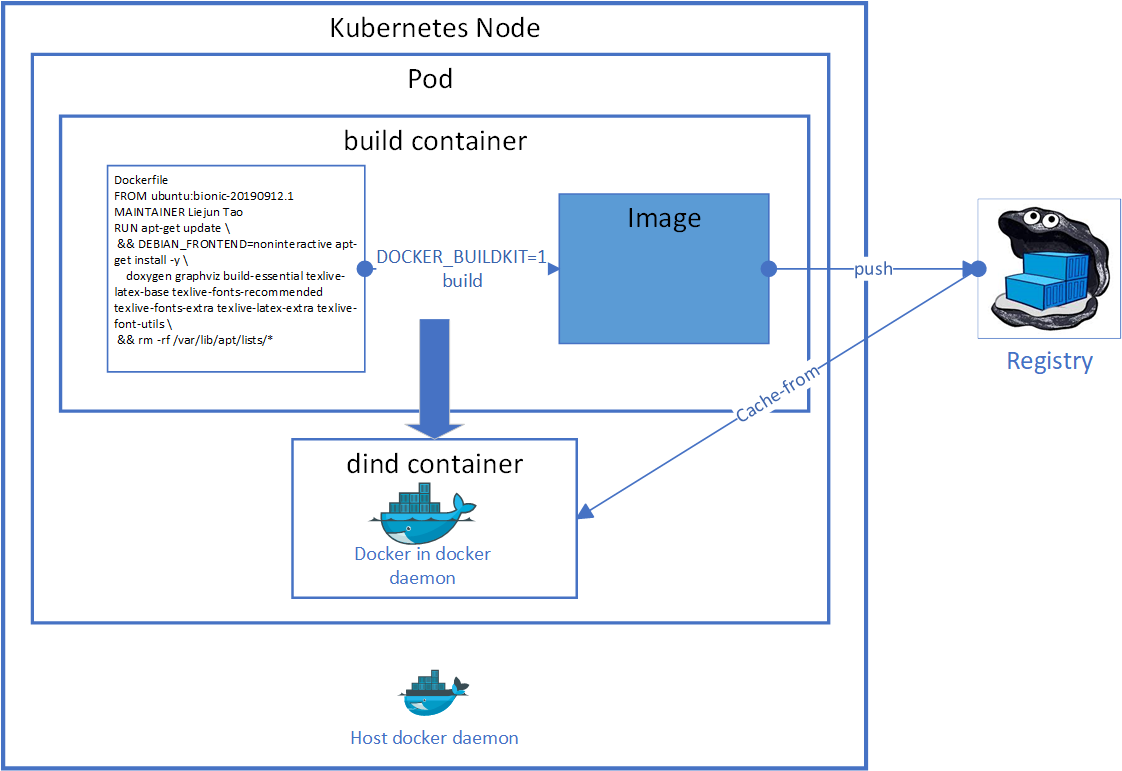

The visualisation from freegroup/kube-s3 makes it pretty clear.


Since every pod expects the data to be available on the host filesystem, we need to make sure every host VM has the folder. A DaemonSet lets us do that: it ensures that one copy of the pod runs on every node you select. In our case, we ask it to run on all nodes.
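Running on all nodes is the DaemonSet default, so no extra configuration is needed for that. If you only wanted the mount on some nodes, a nodeSelector in the pod template would restrict it. The fragment below is a sketch; the label s3=enabled is hypothetical and would be applied with kubectl label nodes NODE_NAME s3=enabled.

```yaml
# Fragment of a DaemonSet spec, not a complete manifest
spec:
  template:
    spec:
      # Only schedule onto nodes carrying the (hypothetical) label s3=enabled.
      # Leave nodeSelector out entirely to run on every node, as in this post.
      nodeSelector:
        s3: "enabled"
```

If you do restrict it, the pods that consume the mount need the same nodeSelector, otherwise they can land on a node where /mnt/s3data is just an empty directory.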

Once it is ready, run k apply -f daemonset.yaml.

If you check the file, you can see that we are mapping /var/s3fs in the container to /mnt/s3data on the host.

daemonset.yaml
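As a sketch of what this file might contain (not the exact manifest from meain/s3-mounter), here is a DaemonSet that mounts the bucket on /var/s3fs inside a privileged container and maps that folder to /mnt/s3data on the host. The name s3-provider matches the pod name used later in this post; the image name and the ConfigMap name s3-config are hypothetical placeholders, and the image's entrypoint is assumed to run s3fs in the foreground.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: s3-provider                  # pods will be named s3-provider-xxxxx
  labels:
    app: s3-provider
spec:
  selector:
    matchLabels:
      app: s3-provider
  template:
    metadata:
      labels:
        app: s3-provider
    spec:
      containers:
        - name: s3fuse
          # Hypothetical placeholder for the image built in Step 1; its entrypoint
          # is assumed to run s3fs in the foreground, mounting the bucket on /var/s3fs.
          image: "your-registry/s3fs-mounter:latest"
          securityContext:
            privileged: true         # Kubernetes counterpart of docker run --privileged
          envFrom:
            - configMapRef:
                name: s3-config      # hypothetical ConfigMap name from Step 2
          lifecycle:
            preStop:
              exec:
                # Unmount cleanly on shutdown; assumes fusermount is in the image
                command: ["fusermount", "-u", "/var/s3fs"]
          volumeMounts:
            - name: devfuse
              mountPath: /dev/fuse
            - name: s3data
              mountPath: /var/s3fs
              # Make the FUSE mount created inside the container visible on the host.
              # Bidirectional propagation is only allowed for privileged containers.
              mountPropagation: Bidirectional
      volumes:
        - name: devfuse
          hostPath:
            path: /dev/fuse
        - name: s3data
          hostPath:
            path: /mnt/s3data        # use /home/s3data on GKE Container-Optimized OS
            type: DirectoryOrCreate
```

The mountPropagation setting is what makes the host-folder trick work: without it, the s3fs mount stays private to the container's mount namespace and the host only ever sees an empty /mnt/s3data.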

If you are using GKE with Container-Optimized OS, /mnt will not be writable; use /home/s3data instead.

With that applied, every node should now have S3 mounted on /mnt/s3data. You can check this by listing /var/s3fs inside one of the DaemonSet pods, for example k exec -it s3-provider-psp9v -- ls /var/s3fs (the pod name suffix will be different in your cluster).

Step 4: Running your actual container

With all that set up, you are ready to do what you actually set out to do. I will show a really simple pod spec.

pod.yaml
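A minimal sketch of such a pod, assuming the DaemonSet above has mounted the bucket on /mnt/s3data on each host. The pod name s3-test and the alpine:3.19 image are illustrative choices; the pod just sleeps so you can exec into it and look around.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: s3-test                  # hypothetical name
spec:
  containers:
    - name: app
      image: alpine:3.19
      # Keep the container alive so you can exec into it
      command: ["sleep", "3600"]
      volumeMounts:
        - name: s3data
          mountPath: /var/s3fs   # where the bucket contents appear in this container
          # Pick up the FUSE mount even if it appears on the host after this pod starts
          mountPropagation: HostToContainer
  volumes:
    - name: s3data
      hostPath:
        path: /mnt/s3data        # the host folder the DaemonSet mounts S3 on
```

After k apply -f pod.yaml, running k exec -it s3-test -- ls /var/s3fs should list the objects in the bucket.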

Apply this, and if you look in /var/s3fs inside the pod, you should see the same files you have in your S3 bucket.

Change mountPath to change where the data is mounted inside the container. Point hostPath.path at a subdirectory if you only want to expose one specific folder.
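For example, a sketch of the two changes together, showing only the fields that differ from the pod spec above. The folder name reports and the mount point /data are hypothetical; use any prefix that exists in your bucket.

```yaml
# Fragment of pod.yaml: only the fields that change
spec:
  containers:
    - name: app
      volumeMounts:
        - name: s3data
          mountPath: /data               # hypothetical: where the files show up in the container
  volumes:
    - name: s3data
      hostPath:
        path: /mnt/s3data/reports        # hypothetical: a single folder (prefix) of the bucket
```

Because the whole bucket is already mounted on the host, exposing a subfolder this way costs nothing extra; each pod simply sees a narrower slice of the same mount.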